Imagine that you’re trying to predict consumer demand for your product based on the present situation.

How do you do this accurately so that you are able to build your strategy around a highly probable estimate?

Enter the Markov Process.

The traditional approach to predictive modelling has been to base probability on the complete history of the data that is available and try to understand the underlying themes and trajectories, but that’s not the case when it comes to Markov chains.

We tend to analyze the past in great detail and comb through previous records, but that is not always the best way forward if you want a simpler predictive analysis or have limited data available.

The Markov Process is a method of predictive modelling that is fast to compute and bases all future predictions on the present situation. It is accurate to the extent that the present state really does capture everything that matters.

Whether it’s predicting the outcome of cricket matches or understanding the causes of severe pneumonia in children, the Markov process is a fairly common methodology utilized by data scientists in the quest to create more accurate predictive analytical models at a faster rate.

Now, many of you may be wondering what the Markov process is and what it has to offer the world of data science. So, let’s take a look and see what this process is all about.

What is the Markov Process & What are Markov chains?

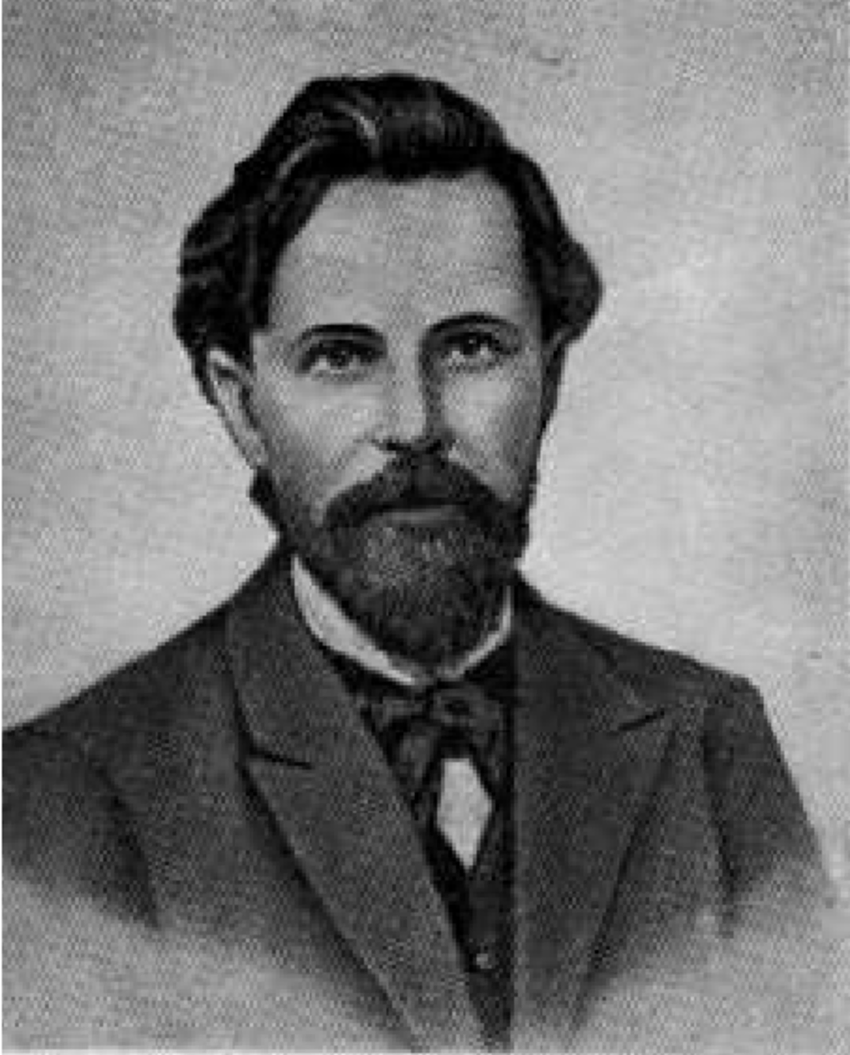

Named after the Russian mathematician Andrey Markov, a Markov Process is defined as a stochastic process that satisfies the Markov property.

This refers to the memoryless property of a stochastic process: the conditional probability distribution of future states, given both past and present states, depends only upon the present state and not on the sequence of events that preceded it.

So, to simplify all of this, let’s talk a little bit about the Markov Assumption, which is what the process is based on.

The Markov assumption is that the Markov property holds: the future, given the present, is independent of the past.

This means that you get all your gravy from the present situation. Once you know the present, the past adds no further information.
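In symbols, for a process observed at discrete steps, the assumption can be written as follows. The notation $X_0, X_1, \dots$ for the state at each step is introduced here purely for illustration:

$$
P(X_{n+1} = x \mid X_n = x_n, X_{n-1} = x_{n-1}, \dots, X_0 = x_0) = P(X_{n+1} = x \mid X_n = x_n)
$$

The left-hand side conditions on the whole history, the right-hand side only on the present state, and the Markov property says the two are equal.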

Now, the next term that you need to familiarize yourself with is the Markov Chain, which is where all the real meat lies.

Markov chains are the building blocks upon which data scientists define their predictions using the Markov Process.

In other words, a Markov chain is a sequence of events in which the probability of each event depends only on the state reached in the previous one, which is exactly the Markov property.

These are the essential characteristics of a Markov process, and one of the most common examples used to illustrate them is the cloudy day scenario.

Imagine that today is a very sunny day and you want to find out what the weather is going to be like tomorrow. Now, let us assume that there are only two states of weather that can exist, cloudy and sunny.

There can be no deviations from these states according to this model.

So, given your experiences with the weather in the past and the fact that you’ve collected the data and crunched the numbers, the probability of the next day being sunny, given that today is sunny, is 0.75.

So, by extension, the probability of the next day being cloudy is 0.25, given that there are only two possible future states for the weather.

Now, another assumption to be made here is that of time-homogeneity, which states that the transition probabilities the model is based on do not change over time.

By adhering to these assumptions, you can now predict the weather for the next ‘n’ days by applying the transition model repeatedly, starting from today’s weather. A full forecast also needs the transition probabilities for the day after a cloudy day, which you would estimate from the same data.
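To make the n-day forecast concrete, here is a minimal sketch in plain Python. The sunny row (0.75 / 0.25) comes from the example above. The cloudy row (0.5 / 0.5) is an assumption made only so the code can run, and the names `TRANSITIONS`, `step`, and `forecast` are illustrative rather than part of any library.

```python
# Two-state weather model.
STATES = ("sunny", "cloudy")

TRANSITIONS = {
    # Probabilities for tomorrow given that today is sunny (from the article).
    "sunny": {"sunny": 0.75, "cloudy": 0.25},
    # Probabilities for tomorrow given that today is cloudy.
    # ASSUMPTION: the article does not give these; 0.5 / 0.5 is a placeholder.
    "cloudy": {"sunny": 0.50, "cloudy": 0.50},
}


def step(distribution):
    """Advance a probability distribution over STATES by one day."""
    return {
        nxt: sum(distribution[cur] * TRANSITIONS[cur][nxt] for cur in STATES)
        for nxt in STATES
    }


def forecast(today, days):
    """Return the weather distribution `days` days after `today`."""
    # Today's weather is known, so it gets probability 1.
    distribution = {s: 1.0 if s == today else 0.0 for s in STATES}
    # Time-homogeneity: the same TRANSITIONS table is reused every day.
    for _ in range(days):
        distribution = step(distribution)
    return distribution


for n in (1, 2, 3):
    print(n, forecast("sunny", n))
```

Only today's state is passed in. Nothing about earlier days is needed, which is the Markov assumption at work.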

Business Applications of the Markov Process

We hope that you’re a bit more familiar with the basic tenets of the Markov process by now. But you may be wondering what the business-relevant characteristics of the Markov process are.

So, let’s move ahead and look at the business applications of this process and how it can be used to predict customer movement and growth trajectories. Let’s take a sample use case to illustrate:

A chocolate company wants to partner with one of the two leading players in the industry, Nestle or Cadbury. Currently, Nestle holds a 50% share of the market, with Cadbury having the other 50% share of the chocolate market.

They now hire one of the top market research and statistics companies to find out which of the companies is likely to be in a better position in the next month.

The market research company finds that:

Probability of a customer staying with Cadbury = 0.8

Probability of customer switching from Cadbury to Nestle = 0.2

Probability of a customer staying with Nestle = 0.6

Probability of a customer switching from Nestle to Cadbury = 0.4

These probabilities show that customers are more likely to stay with Cadbury (0.8) than with Nestle (0.6), and that there is a sizeable, but less than 50%, chance that a Nestle customer will move to Cadbury.

To calculate their market share after the month, we simply have to do the following matrix multiplication:

Current State * Transition Matrix = Final State

Here, the current state will be (50/50), written in the order (Cadbury, Nestle), as both have a 50% market share.

The transition matrix will have in the first row the percentage of customers who will a) be loyal to Cadbury and b) switch to Nestle, that is (80/20).

The second row will have the percentage of customers who are a) switching from Nestle to Cadbury and b) staying loyal to Nestle, which is (40/60).

In a nutshell, that is how a prediction with a Markov chain works. Why don’t you try a simple test and calculate what the final state will be with this data we’ve given you? Mail the answer to us, and we’ll tell you if you’re right!
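If you would like to check your answer, the multiplication can be sketched in a few lines of plain Python. The function name `next_state` and the (Cadbury, Nestle) ordering of the state vector are choices made for this illustration. The numbers are the ones reported by the market research company.

```python
# State order is (Cadbury, Nestle) throughout.
current_state = [0.5, 0.5]

transition_matrix = [
    [0.8, 0.2],  # from Cadbury: stay with Cadbury, switch to Nestle
    [0.4, 0.6],  # from Nestle: switch to Cadbury, stay with Nestle
]


def next_state(state, matrix):
    """Multiply a row vector by a transition matrix (state * matrix)."""
    return [
        sum(state[i] * matrix[i][j] for i in range(len(state)))
        for j in range(len(matrix[0]))
    ]


final_state = next_state(current_state, transition_matrix)
cadbury_share, nestle_share = final_state
print(f"Cadbury: {cadbury_share:.0%}, Nestle: {nestle_share:.0%}")
```

Feeding `final_state` back into `next_state` projects a further month ahead, assuming the switching probabilities stay the same.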

Markov Process

So, there you have it. We hope this answered your questions about what the Markov process is and what its characteristics are.

A multitude of businesses use the Markov process, and its real-world applications are wide-ranging. It is often applied in dualistic situations, that is, when there can be only two outcomes, although a Markov chain can have any number of states.

A great example of this is the banking sector, where most banks derive revenue from the loans that they have given out.

Therefore, it is essential to accurately predict the likelihood of loans being repaid, which is where Markov chains can be of great assistance.

Another exciting line of business where the potential of Markov chains is only just being explored is sales and marketing.

Markov chains can be effectively used to predict customer loyalty and retention, another dualistic situation where the customer either stays with the company or doesn’t.

These are just a couple of conventional industry uses for the Markov Process, and there are many more.

Thus, understanding this process is essential for anyone who wants to become a successful data scientist, which is one of the most lucrative career options in the market today.

Wrapping Up

Building a better understanding of the Markov process can be crucial to honing your predictive analytics skills.

However, it is also essential to learn about the other facets of data science if you genuinely want to become a top-notch data scientist. You need to truly understand what data science is all about before you decide to pursue it as a career option.
